With an iPhone in one pocket and an iPad in another, my shoulder bag lets me work pretty much anywhere. I work in the car (a Bluetooth headset when I'm driving; the iPad when my wife drives). I grab a free half-hour and knock items off my OmniFocus master task list, which syncs completely and reliably across my iPhone, iPad, and iMac. I read a lot of books and articles. I suppose my life runs busier than average. After a vacation week without email, using the iPad only to plot homemade detours around nasty interstate backups, I've given some thought to the clear benefits of unitasking.

My auto-correct just underlined the word "unitasking" because the computer did not recognize it. I checked with Merriam-Webster; my search for "unitasking" returned "the word you've entered is not in the dictionary." When I checked "multitasking," the first sense read "the concurrent performance of several jobs by a computer," and the second read "the performance of multiple tasks at one time."

A Google search for "multitask" returned these images, and many others.

Of course, computers and humans multitask all the time. Computers run multiple operations at high speeds, some simultaneously, some sequentially. As I write, I run my fingers along the keys and press buttons at surprisingly high speeds; I read what I am writing on the screen; I think ahead to the next few words and, further out, to the setup for the next idea; I stay aware of grammar, clarity, flow, and word choice; and I make corrections and adjustments along the way. I am also aware of the total word count, keeping my statements brief because I am writing for the internet (so far, so good: my current word count is 279).

To ease the writing process, I listen to music. For six dollars, I recently bought a six-LP box set of Bach Cantatas on the excellent Archiv label, and I am getting to know them by playing the music in the background as I write. I do not find this distracting, but the moment the telephone rings while I'm in mid-thought, I become instantly grouchy.

I wonder why.

When I write, I focus on the writing, but writing is not a continuous process in the way that, for example, hiking a mountain might be. I write for a few seconds, perhaps cast a phrase, then pause and listen to the soprano or the horns for a moment, then write a few sentences in a burst of energy, then pause again to collect my thoughts. I am thinking sequentially, not requiring my brain or body to do several things at one time. So far this morning, the scheme seems to be working (441 words).

Do I multitask?

You betcha, but not when I write because writing requires so much of my focused attention.

Just before vacation, I attended a meeting with two dozen other people, all seated for discussion around a large rectangular table in a Chicago airport hotel conference room. Most of the participants were CEOs or people with similar responsibilities. From time to time, half of the people in the room were engaged enough in the discussion to lift their eyes from their iPads (few used computers; most used iPads). Most of the time, just a few people were deeply engaged. Part of the problem: the meeting was similar to the one held last summer in the same location, on the same topics. When engagement is low, the active brain fills in with activities that promise more of it, and the iPad provides an irresistible alternative to real life. Of course, if more of the people had looked up from their iPads and joined the conversation not-quite-happening in the room where they were seated, that conversation might well have been worthy of more of everyone's attention. This raises the stakes for those who plan such meetings: if the meeting does not offer sufficient nourishment, minds drift.

Once again, that makes me wonder about the value of multitasking. Each of us spent a good $1,000 to meet in Chicago and discuss matters of importance in one another's company. During the few times when the whole group was fully engaged, the engagement was not the result of multitasking. Instead, there was a single topic presented and a single, well-managed discussion. In short, we engaged in a unitasking activity.

One final thought before we all drift back into our bloated to-do lists. On vacation, I like to read a good story, well-crafted fiction by an author who really knows how to write. This time, I tried Jeffrey Lent's A Peculiar Grace. I read in the car, in the hotel room late into the night, and whenever a good half hour of free time presented itself, and I found myself thinking about the characters, the story, the setting, and the overall feeling of the book even when I was not reading it. That kind of immersion comes, of course, from the unitasking that a good book demands and deserves.

So why isn’t unitasking in the dictionary? And what do we need to do in order to bring this word into common use, and this way of thinking into common practice?

![(Copyright 2006 by Zelphics [Apple Bushel])](https://diginsider.com/wp-content/uploads/2013/03/apple_bushel.png?w=660)

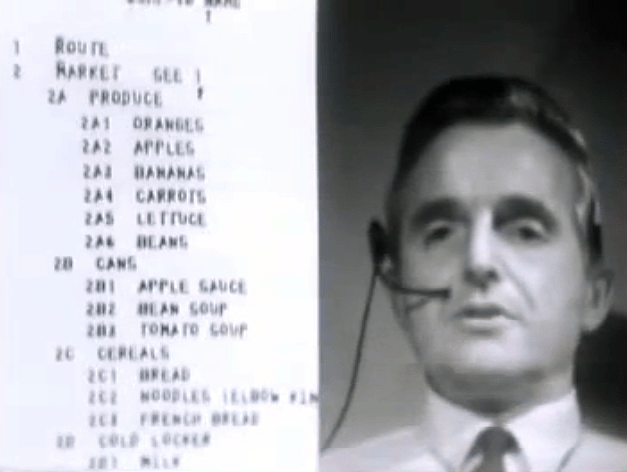

Much of Kurzweil's theory grows from his advanced understanding of pattern recognition, the ways we construct digital processing systems, and the (often similar) ways that the neocortex seems to work (nobody is certain how the brain works, but we are gaining a lot of understanding as a result of various biological and neurological mapping projects). Digital and human brains seem to share a common grid structure. A tremendous number of pathways turn on or off, at very fast speeds, to enable processing, or thought. There is tremendous redundancy, as evidenced by patients who, after brain damage, are able to relearn by placing the new thinking in different (undamaged) parts of the neocortex.